Published in Blog

How fast is Java 21?

Explore the performance of Java 21 against Java 17, revealing minor improvements in micro-benchmarks and real-world scenarios.

With the release of Java 21 just around the corner, you may be wondering how it compares to Java 17 and whether you should upgrade. Here at Timefold, we were wondering the same thing. Read on to find out how Timefold Solver performs on Java 21 compared to Java 17.

But first, let’s get some things out of the way.

What is Java 21 and how to get it

Java 21 is a new release of the Java platform, the trusty programming language that Timefold Solver is written in. It brings a bunch of new features, as well as the usual bugfixes and smaller improvements.

Java 21 will be generally available on September 19, 2023, but you can already try it out using the release candidate builds. We find that the easiest way to get started with Java 21 is to use SDKMAN, and that is what we did as well. (See the end of this post for the specific versions and hardware used.)
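If you switch between JDKs with SDKMAN, it is worth confirming which runtime actually executes your code before you benchmark anything. The following is a minimal sketch of our own, not part of Timefold Solver (the class name `JavaVersionCheck` is hypothetical); it relies only on `Runtime.version()`, which has been available since Java 10.

```java
// Hypothetical helper: print the version of the JVM that is actually running,
// e.g. to confirm that switching JDKs with SDKMAN took effect.
public class JavaVersionCheck {

    /** Returns the feature-release number of the running JVM, e.g. 17 or 21. */
    static int featureVersion() {
        return Runtime.version().feature();
    }

    public static void main(String[] args) {
        Runtime.Version version = Runtime.version();
        System.out.println("Running on Java " + version
                + " (" + System.getProperty("java.vm.name") + ")");
        if (featureVersion() < 21) {
            System.out.println("This JVM predates Java 21; switch JDKs before benchmarking.");
        }
    }
}
```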

Like Java 17 before it, Java 21 is a long-term support (LTS) release; it will stick around for quite a while. It is therefore a good idea to start using it as soon as possible and see if it works for you.

For Timefold Solver, that means making sure the entire codebase continues to work flawlessly on Java 21, as well as running some benchmarks to ensure our users can expect at least the same performance as before. Let’s get started on that.

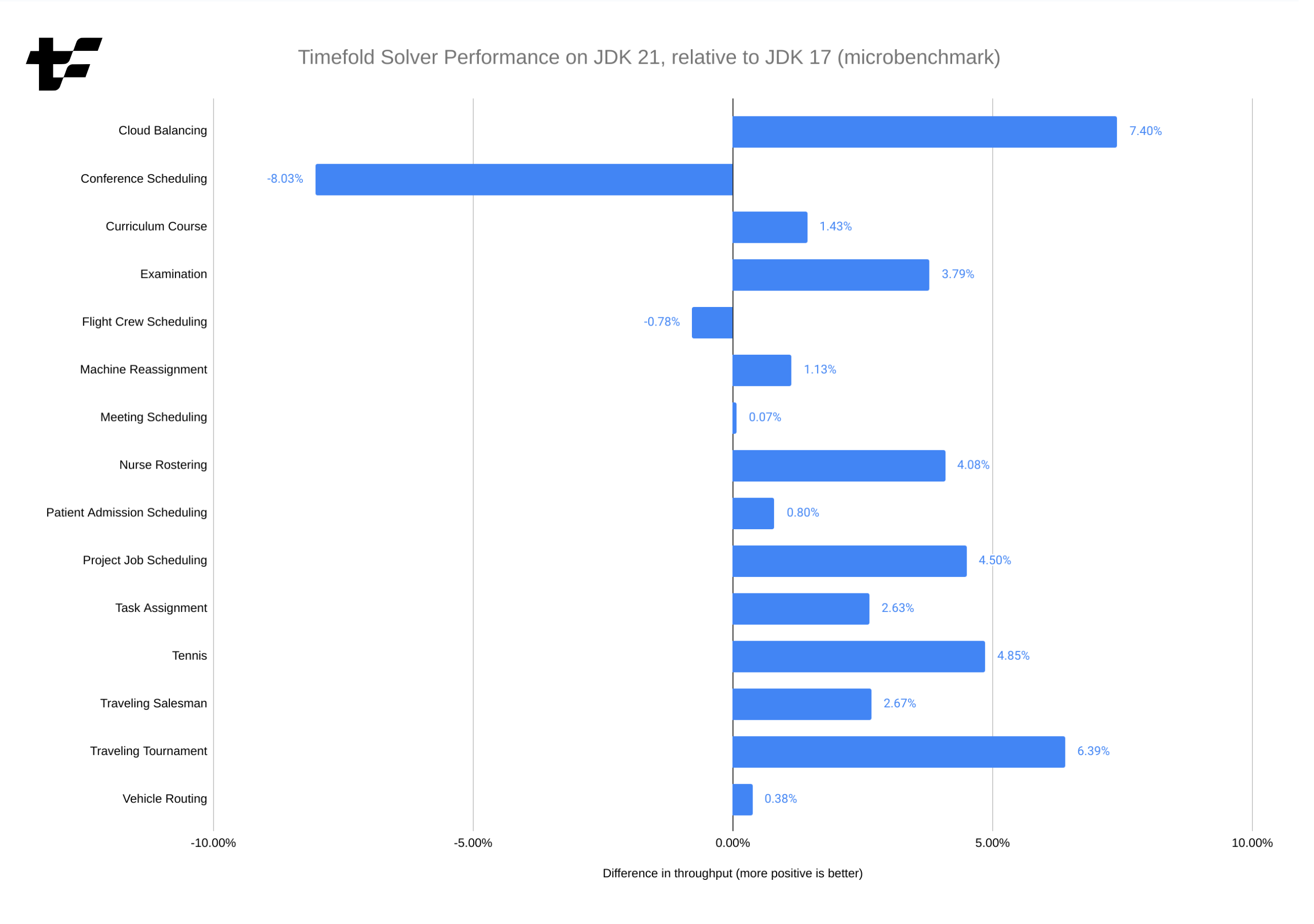

Micro-benchmarks

We’ll start with score director micro-benchmarks, which we use regularly to establish the impact of various changes on the performance of Constraint Streams. These benchmarks do not run the entire solver; rather, they focus exclusively on the score calculation part of the solver.

They are implemented using the Java Microbenchmark Harness (JMH) and run in many Java Virtual Machine (JVM) forks with sufficient warmup, which gives us a good level of confidence in the results: the margin of error on these numbers is only ±2%.

Here is the Constraint Streams performance on Java 21 vs. Java 17:

Most cases show a small performance improvement when switching to Java 21. The "Conference Scheduling" benchmark is the only outlier, showing a possible regression; with some extra work on the solver, we can likely improve its performance as well.
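To make "small improvement" and "outlier" concrete, each result can be expressed as a percent change in throughput relative to Java 17 and compared against the ±2% margin of error. This is a simplified, hypothetical sketch: the class and method names are ours, the numbers in `main` are illustrative rather than measured, and treating everything inside ±2% as noise ignores that both measurements carry their own error.

```java
// Hypothetical helper: classify a Java 21 vs. Java 17 benchmark result,
// treating any change within the stated ±2% margin of error as noise.
public class BenchmarkComparison {

    static final double MARGIN_OF_ERROR_PERCENT = 2.0;

    /** Relative change of the candidate throughput versus the baseline, in percent. */
    static double percentChange(double baselineThroughput, double candidateThroughput) {
        return (candidateThroughput - baselineThroughput) / baselineThroughput * 100.0;
    }

    /** Higher throughput is better, so a positive change counts as an improvement. */
    static String classify(double baselineThroughput, double candidateThroughput) {
        double change = percentChange(baselineThroughput, candidateThroughput);
        if (change > MARGIN_OF_ERROR_PERCENT) {
            return "improvement";
        } else if (change < -MARGIN_OF_ERROR_PERCENT) {
            return "regression";
        } else {
            return "within noise";
        }
    }

    public static void main(String[] args) {
        // Illustrative numbers only, not measured results.
        double java17 = 100_000.0;
        double java21 = 103_500.0;
        System.out.printf("%.1f%% -> %s%n",
                percentChange(java17, java21), classify(java17, java21));
    }
}
```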

It should be noted that we ran these benchmarks with ParallelGC as the garbage collector (GC) instead of the default G1GC. Later in this post we’ll explain why.
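Since the choice of collector matters so much here, it helps to verify which one a JVM is really using. A minimal sketch, assuming a HotSpot-based JDK such as the ones listed in the appendix: it prints the names of the garbage collector MXBeans, which differ per collector. Start the JVM with `-XX:+UseParallelGC` to select ParallelGC; without a GC flag, the default G1GC is used. The class name `ActiveGcReport` is hypothetical.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper: list the garbage collectors the running JVM is using,
// to confirm that a flag such as -XX:+UseParallelGC actually took effect.
public class ActiveGcReport {

    /** Names of the collector MXBeans reported by the running JVM. */
    static List<String> collectorNames() {
        return ManagementFactory.getGarbageCollectorMXBeans().stream()
                .map(GarbageCollectorMXBean::getName)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // With -XX:+UseParallelGC, HotSpot typically reports "PS Scavenge" and "PS MarkSweep";
        // with the default G1GC, the names start with "G1".
        collectorNames().forEach(System.out::println);
    }
}
```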

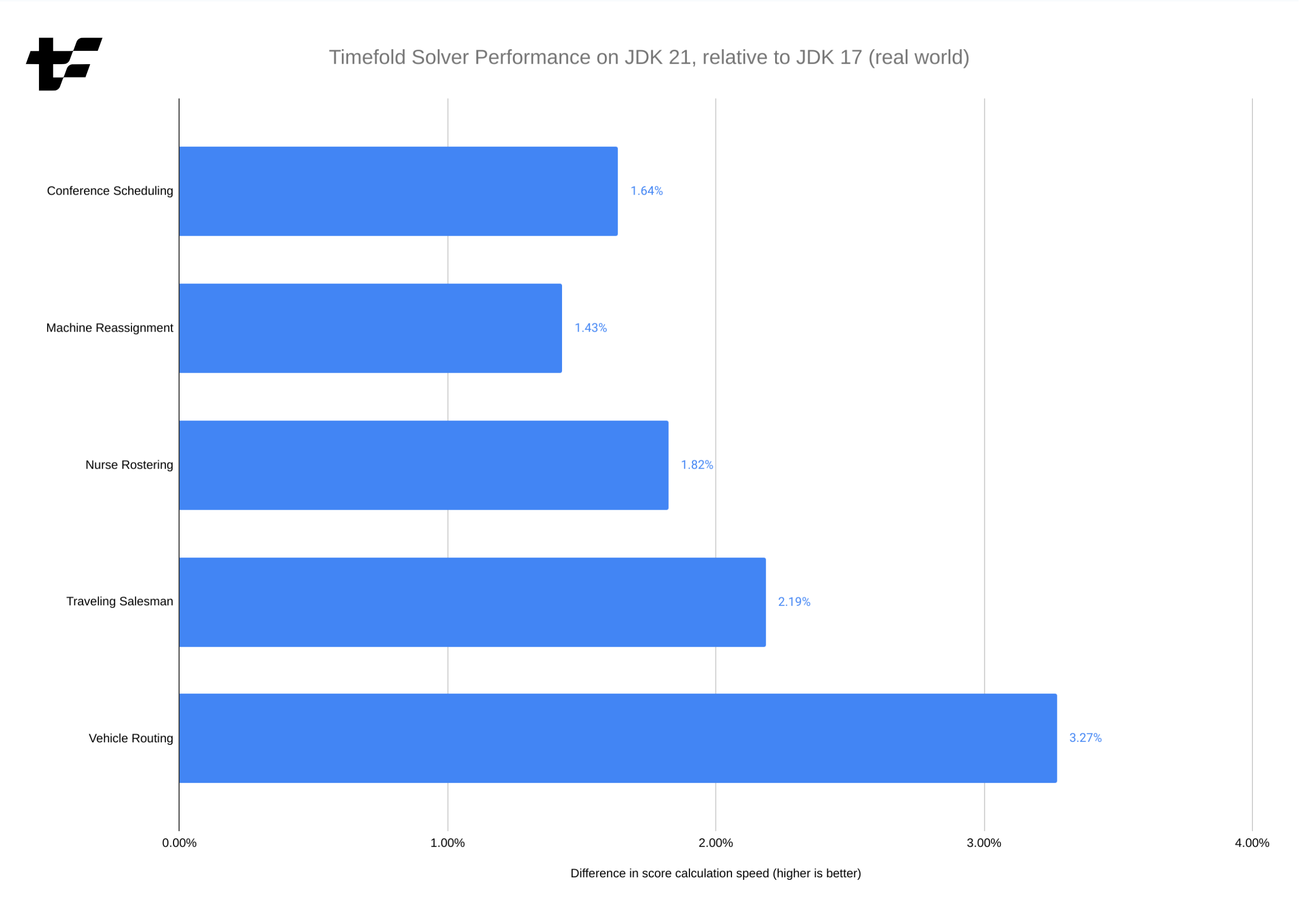

Real-world benchmarks

Now that we’ve seen the micro-benchmarks, it’s time to compare them to real-world solver performance. This includes the entire solver, not just the score calculation part.

We ran the solver manually in 10 different JVM forks, and used the median score calculation speed.

We selected a subset of the available benchmarks to keep the run time short; the selection is representative of the entire benchmark suite in terms of heuristics used and code paths exercised.

Once again, ParallelGC is used as the garbage collector.
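Taking the median of the per-fork results keeps a single unusually fast or slow fork from skewing the comparison. A minimal sketch of that reduction, assuming one score calculation speed per fork; the class name and the sample values are hypothetical placeholders, not our measurements.

```java
import java.util.Arrays;

// Hypothetical helper: reduce per-fork score calculation speeds to a single median value.
public class MedianSpeed {

    /** Median of the given samples; for an even count, the mean of the two middle values. */
    static double median(double[] samples) {
        if (samples.length == 0) {
            throw new IllegalArgumentException("need at least one sample");
        }
        double[] sorted = samples.clone(); // leave the caller's array untouched
        Arrays.sort(sorted);
        int middle = sorted.length / 2;
        return sorted.length % 2 == 1
                ? sorted[middle]
                : (sorted[middle - 1] + sorted[middle]) / 2.0;
    }

    public static void main(String[] args) {
        // Illustrative placeholder values, one per JVM fork; not measured data.
        double[] speeds = {51_200, 50_900, 51_500, 50_700, 51_100,
                           51_300, 50_800, 51_000, 51_400, 50_600};
        System.out.println("Median score calculation speed: " + median(speeds));
    }
}
```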

Here are the results:

There are no surprises here. We see small performance improvements across the board, confirming the results of the micro-benchmarks. Compared to the micro-benchmarks, "Conference Scheduling" no longer registers as an outlier, which is interesting and will serve as another data point in our investigation into that possible regression.

Since we haven’t established a formal confidence interval for these large benchmarks, we can’t say with certainty that the improvements are statistically significant. However, the fluctuations observed between runs have been small enough to give us confidence in the results.
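For reference, one common way to establish such an interval is a Student's t confidence interval over the per-fork results. The sketch below is hypothetical and not how the numbers in this post were produced: it assumes exactly 10 roughly normally distributed samples, hard-codes the two-sided 95% critical value for 9 degrees of freedom (about 2.262), and describes the mean rather than the median.

```java
// Hypothetical sketch: a 95% confidence interval for the mean of exactly 10 runs,
// using Student's t critical value for 9 degrees of freedom.
public class ConfidenceInterval {

    static final double T_CRITICAL_95_DF9 = 2.262;

    /** Returns {lower, upper} bounds of the 95% confidence interval for the mean. */
    static double[] interval95(double[] samples) {
        if (samples.length != 10) {
            throw new IllegalArgumentException("the hard-coded t value assumes exactly 10 samples");
        }
        double mean = 0.0;
        for (double s : samples) {
            mean += s;
        }
        mean /= samples.length;

        double sumSquares = 0.0;
        for (double s : samples) {
            sumSquares += (s - mean) * (s - mean);
        }
        double stdDev = Math.sqrt(sumSquares / (samples.length - 1)); // sample standard deviation
        double halfWidth = T_CRITICAL_95_DF9 * stdDev / Math.sqrt(samples.length);
        return new double[] {mean - halfWidth, mean + halfWidth};
    }

    public static void main(String[] args) {
        // Illustrative values only, not measured data.
        double[] runs = {10.1, 9.9, 10.0, 10.2, 9.8, 10.1, 10.0, 9.9, 10.0, 10.0};
        double[] ci = interval95(runs);
        System.out.printf("95%% CI for the mean: [%.3f, %.3f]%n", ci[0], ci[1]);
    }
}
```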

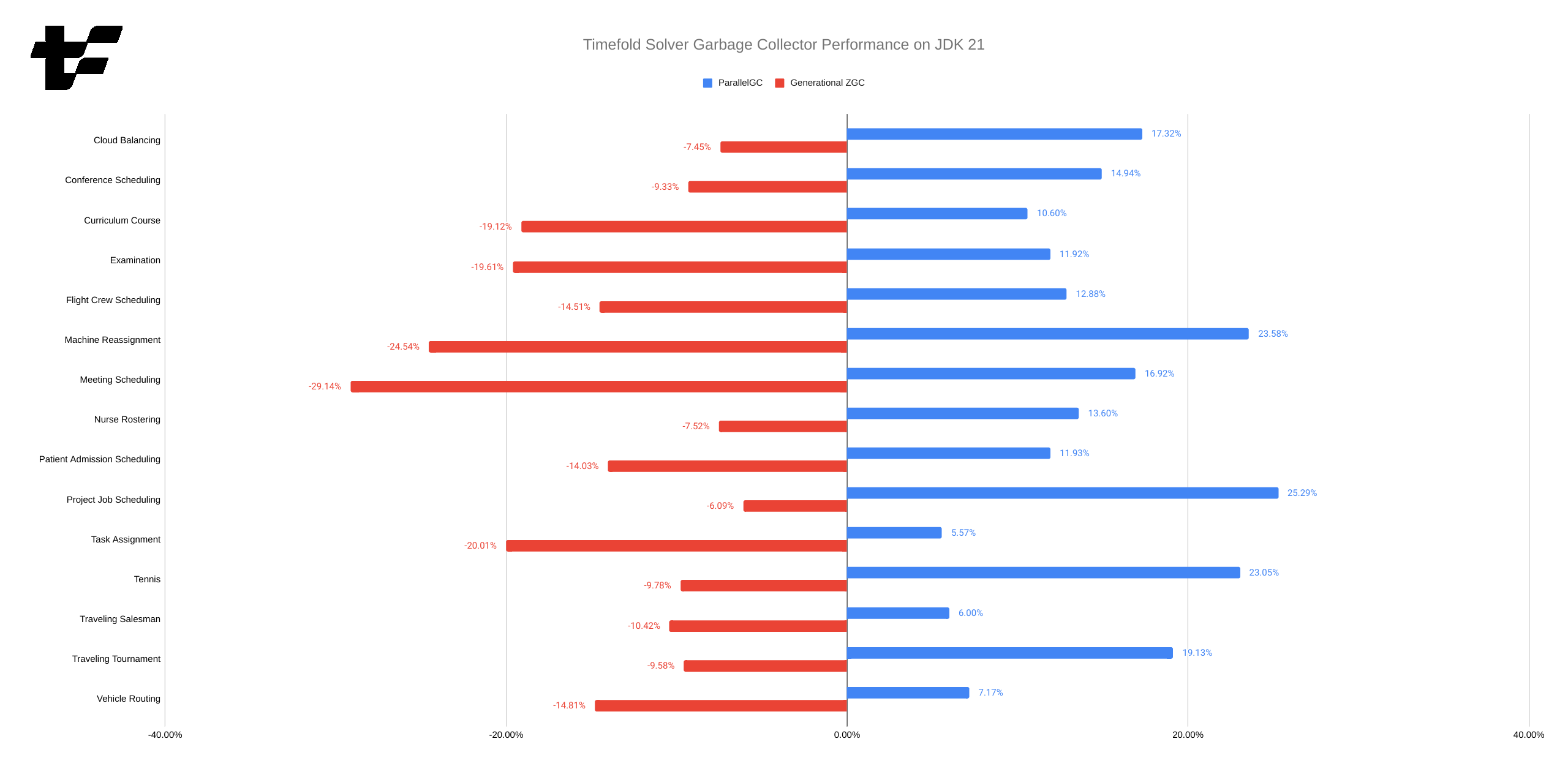

Why ParallelGC?

In the years that we’ve been working with Timefold Solver and its predecessor, OptaPlanner, we’ve found that ParallelGC is the best garbage collector for the solver. This should not be surprising: ParallelGC is tailored for high throughput, and the solver is 100% CPU-bound. G1GC (the default garbage collector) is instead tailored for low latency, and that makes a considerable difference.

However, things change, and we occasionally need to challenge our assumptions. Is ParallelGC still the best GC for the solver?

The following chart shows the difference in performance between G1GC (the baseline) and ParallelGC. And since Java 21 introduced generational ZGC, another GC aiming for low latency, we thought it would be interesting to include that as well.

The results (obtained with the micro-benchmarks from earlier) are clear:

- ParallelGC continues to be the best GC for the solver.
- G1GC comes second, but it’s considerably slower.
- ZGC is by far the worst of the three.
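For readers who want to repeat the comparison, these are the HotSpot flags that select each collector on Java 21. The following hypothetical sketch assembles a `java` command line per configuration with the 1 GB heap used here; the entry point `com.example.MyBenchmark` is a placeholder. Note that on Java 21, generational ZGC requires `-XX:+ZGenerational` in addition to `-XX:+UseZGC`.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: the HotSpot flags that select each compared collector
// on Java 21, combined with a 1 GB maximum heap.
public class GcConfigurations {

    static Map<String, List<String>> gcFlags() {
        Map<String, List<String>> flags = new LinkedHashMap<>(); // keeps insertion order
        flags.put("G1GC (baseline)", List.of("-XX:+UseG1GC"));
        flags.put("ParallelGC", List.of("-XX:+UseParallelGC"));
        // On Java 21, generational ZGC must be requested explicitly on top of ZGC.
        flags.put("Generational ZGC", List.of("-XX:+UseZGC", "-XX:+ZGenerational"));
        return flags;
    }

    /** Builds a java command line for one configuration; mainClass is a placeholder. */
    static List<String> command(String gcName, String mainClass) {
        List<String> gcSpecific = gcFlags().get(gcName);
        if (gcSpecific == null) {
            throw new IllegalArgumentException("unknown GC configuration: " + gcName);
        }
        List<String> cmd = new ArrayList<>();
        cmd.add("java");
        cmd.add("-Xmx1G");
        cmd.addAll(gcSpecific);
        cmd.add(mainClass);
        return cmd;
    }

    public static void main(String[] args) {
        for (String gc : gcFlags().keySet()) {
            // "com.example.MyBenchmark" is a hypothetical entry point.
            System.out.println(gc + ": " + String.join(" ", command(gc, "com.example.MyBenchmark")));
        }
    }
}
```

Each resulting list could be passed to `new ProcessBuilder(command).inheritIO().start()` to launch the corresponding JVM.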

The situation may change if we increase the heap size available to the JVM, as ParallelGC does not scale well with large heaps, but with -Xmx1G, it is the clear winner. (And 1 GB of heap is more than enough for many use cases of Timefold Solver.)
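To confirm that a heap limit such as `-Xmx1G` is in effect, the running JVM can report its maximum heap size. A minimal sketch (the class name `HeapCheck` is hypothetical); depending on the collector, `Runtime.maxMemory()` may report somewhat less than the `-Xmx` value, for example because ParallelGC excludes one survivor space from it.

```java
// Hypothetical helper: report the maximum heap the JVM will attempt to use,
// to confirm that a setting such as -Xmx1G took effect.
public class HeapCheck {

    /** Maximum heap size in MiB, as reported by Runtime.maxMemory(). */
    static long maxHeapMiB() {
        return Runtime.getRuntime().maxMemory() / (1024 * 1024);
    }

    public static void main(String[] args) {
        System.out.println("Max heap: " + maxHeapMiB() + " MiB");
    }
}
```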

Conclusion

In this post, we’ve shown that:

- Timefold Solver 1.1.0 works perfectly fine with Java 21; no changes are needed.
- Switching to Java 21 may bring a small performance improvement to your Timefold Solver application, but your mileage may vary slightly.
- ParallelGC continues to be the best garbage collector for the solver.

We encourage you to try Java 21 and make the switch. It is free, after all, and you’ll be able to enjoy the latest and greatest Java platform.

Appendix A: Reproducing the results

These benchmarks use Timefold Solver 1.1.0, the latest version at the time of writing.

All benchmarks were run on Fedora Linux 38, with Intel Core i7-12700H CPU and 32 GB of RAM.

We used OpenJDK Runtime Environment Temurin-17.0.8+7 (build 17.0.8+7) (available as 17.0.8-tem on SDKMAN) and OpenJDK Runtime Environment (build 21+35-2513) (available as 21.ea.35-open on SDKMAN).

The source code of the micro-benchmarks can be found in my personal repository.

The real-world benchmarks were run using timefold-solver-benchmark, this configuration, and a simple script. (You’ll need timefold-solver-examples on the classpath.)

All data used for the charts can be found in this spreadsheet.